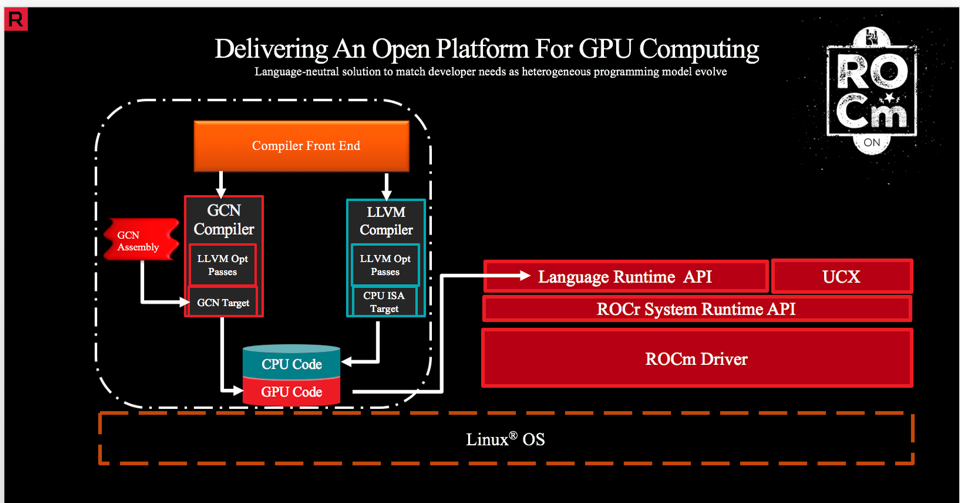

Interaction of Tensorflow and Keras with GPU, with the help of CUDA and...

Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

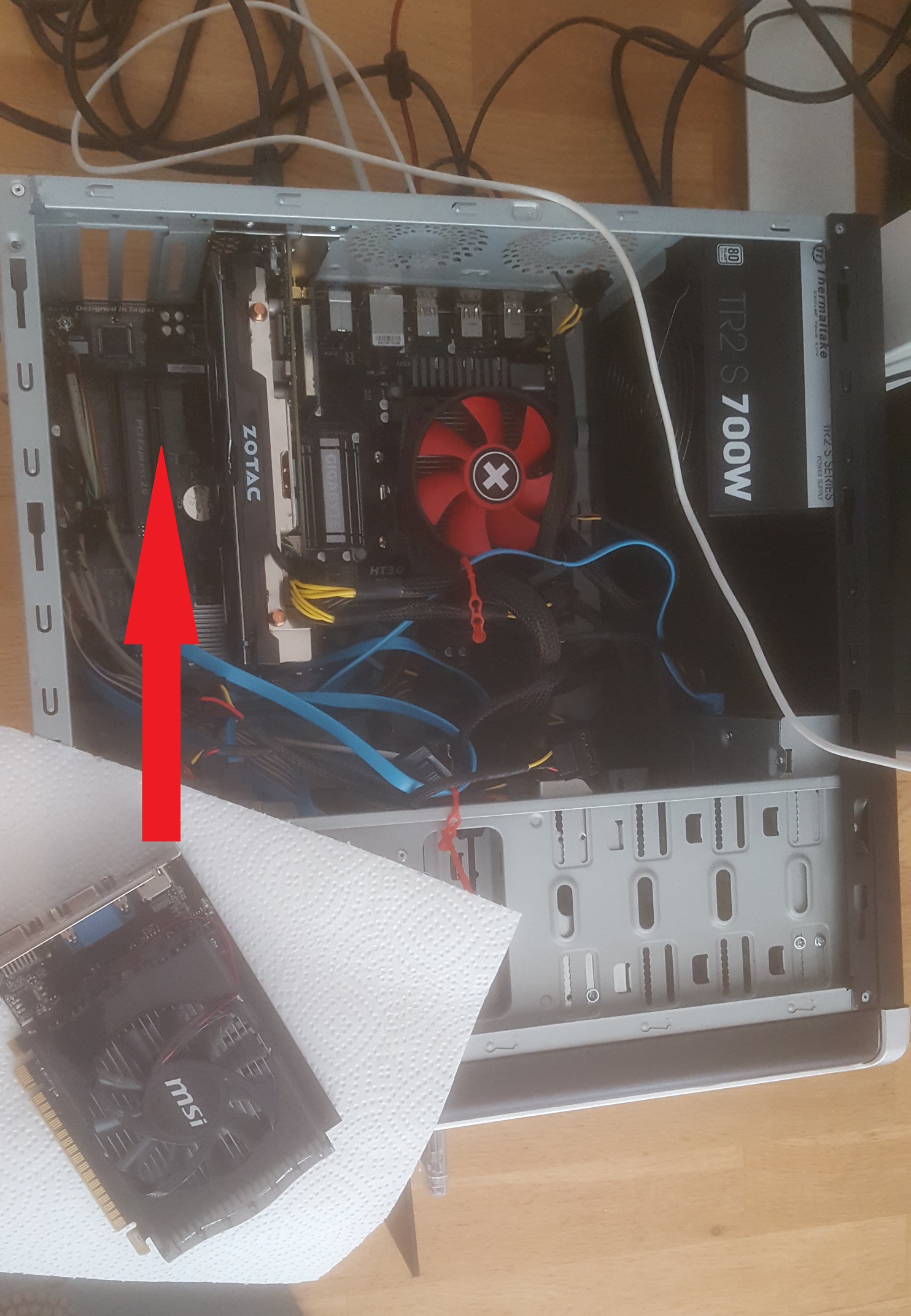

Howto Install Tensorflow-GPU with Keras in R - A manual that worked on 2021.02.20 (and likely will work in future)